- www.law.cornell.edu, this legal search engine is free but focuses mainly on federal and New York State materials.

- www.findlaw.com, this site is another free search engine, with a general focus on federal cases and statutes.

- www.supremecourtus.gov, as the name suggests, offers Supreme Court cases.

- www.usa.gov, offers government statistics and information.

- www.municode.com, this site provides state and county codes from across the U.S.

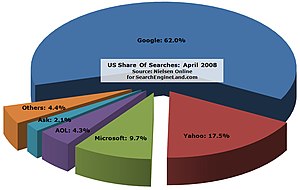

- www.google.com, basic search engines like this one are a great way to begin a search and gather key terms.

There are also alternatives to Westlaw and Lexis that cost a fraction of their price. For instance, www.loislaw.com is very similar to Westlaw and Lexis but runs about $160 a month. Also, www.itislaw.com is the National Law Library website and provides federal and state cases for around $80 a month. Finally, www.versuslaw.com is similar to itislaw and runs about $15 a month.

These are not the only sites on the web, and any preference for one site over another is merely my opinion. Keep searching.